Sentiment analysis is one of the first things data analysts reach for when they have unlabelled text data (with no score or rating) and want to extract some insight from it. It is also an active research area for NLP (Natural Language Processing) enthusiasts.

For an analyst, though, sentiment analysis can be a pain in the neck. Most primitive packages/libraries for it perform a simple dictionary lookup and calculate a final composite score from the number of occurrences of positive and negative words. That often produces false positives, a very obvious case being ‘happy’ vs ‘not happy’: negations and, more generally, valence shifters.

Consider the sentence ‘I am not very happy’. A primitive sentiment analysis algorithm would flag it as positive because of the word ‘happy’, which would appear in the positive dictionary. Reading it, though, we know it is not a positive sentence.
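To make the false positive concrete, here is a minimal base-R sketch of the kind of primitive lookup described above. The word lists and the naive_score() helper are hypothetical and not taken from any package; the scoring rule (+1 per positive word, -1 per negative word, context ignored) is an assumption chosen for simplicity.

```r
# Hypothetical toy lexicons, for illustration only
positive_words <- c("happy", "good", "great")
negative_words <- c("sad", "bad", "terrible")

# Primitive dictionary lookup: count hits, ignore context entirely
naive_score <- function(text) {
  # lower-case and split on anything that is not a letter or apostrophe
  words <- unlist(strsplit(tolower(text), "[^a-z']+"))
  words <- words[words != ""]
  # +1 per positive hit, -1 per negative hit
  sum(words %in% positive_words) - sum(words %in% negative_words)
}

naive_score("I am not very happy")  # 1, i.e. flagged as positive
naive_score("He is very happy")     # also 1: "not" changes nothing
```

Both sentences get the same score, which is exactly the ‘happy’ vs ‘not happy’ problem.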

While we could build our own way of handling these negations, there are a couple of newer R packages that do this with ease. One such package is sentimentr, developed by Tyler Rinker.

Installing the package

sentimentr can be installed from CRAN, or the development version can be installed from GitHub.

install.packages('sentimentr')

#or

library(devtools)

install_github('trinker/sentimentr')

# load the package once it is installed
library(sentimentr)

Why sentimentr?

The package author himself explains what sentimentr does that other packages don’t, and why it matters:

“sentimentr attempts to take into account valence shifters (i.e., negators, amplifiers (intensifiers), de-amplifiers (downtoners), and adversative conjunctions) while maintaining speed. Simply put, sentimentr is an augmented dictionary lookup. The next questions address why it matters.”

Sentiment Scoring:

sentimentr offers sentiment scoring through two functions:

1. sentiment_by()

2. sentiment()

Aggregated (Averaged) Sentiment Score for a given text with sentiment_by

sentiment_by('I am not very happy', by = NULL)

   element_id sentence_id word_count   sentiment
1:          1           1          5 -0.06708204

But an aggregated score might not help much when a text contains several sentences of different polarity. In that case, sentence-level scoring with sentiment() helps.

sentiment('I am not very happy. He is very happy')

   element_id sentence_id word_count   sentiment
1:          1           1          5 -0.06708204
2:          1           2          4  0.67500000

Both functions return a data frame (specifically a data.table, hence the "1:" row labels) with four columns:

1. element_id – ID / serial number of the given text

2. sentence_id – ID / serial number of the sentence within that text; for sentiment_by, this is equal to element_id

3. word_count – number of words in the given sentence

4. sentiment – sentiment score of the given sentence
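To see roughly where scores like these come from, here is a toy base-R sketch of an augmented dictionary lookup. It is not sentimentr's implementation, and every name in it (toy_sentence_score(), polarity, negators, amplifiers, amp_weight) is hypothetical. It assumes a polarity of 0.75 for ‘happy’ and an amplifier weight of 0.8, flips polarity when an odd number of negators appears in a window of up to four words before the polarized word, treats an amplifier in a negated context as a de-amplifier, and divides by the square root of the word count. Under those assumptions it reproduces the two scores shown above; the real package also looks at words after the polarized word, handles adversative conjunctions and uses far larger lexicons.

```r
# Hypothetical mini-lexicons; 0.75 and 0.8 are assumptions, not package data
polarity   <- c(happy = 0.75, terrible = -0.75)
negators   <- c("not", "never")
amplifiers <- c("very", "really")
amp_weight <- 0.8

toy_sentence_score <- function(sentence, n_before = 4) {
  words <- unlist(strsplit(tolower(sentence), "[^a-z']+"))
  words <- words[words != ""]
  total <- 0
  for (i in which(words %in% names(polarity))) {
    # context window: up to n_before words preceding the polarized word
    ctx <- if (i > 1) words[max(1, i - n_before):(i - 1)] else character(0)
    negated <- sum(ctx %in% negators) %% 2 == 1  # odd count flips polarity
    amp <- sum(ctx %in% amplifiers) * amp_weight
    # in a negated context the amplifier shrinks the score instead
    weight <- if (negated) max(1 - amp, 0) else 1 + amp
    sign <- if (negated) -1 else 1
    total <- total + polarity[[words[i]]] * sign * weight
  }
  total / sqrt(length(words))  # normalise by sentence length
}

toy_sentence_score("I am not very happy")  # -0.15 / sqrt(5), about -0.0671
toy_sentence_score("He is very happy")     #  1.35 / sqrt(4) = 0.675
```

Dividing by sqrt(length(words)) rather than by the word count itself dampens long sentences without shrinking their scores toward zero as quickly.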

Extract Sentiment Keywords

The extract_sentiment_terms() function extracts the keywords, both positive and negative, that were part of the sentiment score calculation. sentimentr also works with the pipe operator %>%, which lets us chain several steps with fewer intermediate assignments and cleaner code.
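Conceptually, extracting sentiment terms means keeping the words that matched the lexicon and grouping them by polarity. Here is a minimal base-R sketch with a hypothetical mini-lexicon and a hypothetical toy_extract_terms() helper; sentimentr's own lexicon and output format differ.

```r
# Hypothetical mini-lexicon, for illustration only
lexicon <- c(terrible = -0.75, lost = -0.5, hope = 0.5)

toy_extract_terms <- function(text) {
  words <- unlist(strsplit(tolower(text), "[^a-z']+"))
  hits  <- words[words %in% names(lexicon)]  # words found in the lexicon
  list(negative = hits[lexicon[hits] < 0],
       positive = hits[lexicon[hits] > 0])
}

toy_extract_terms("My life has become terrible since I met you and lost money")
```

Which words are picked up depends entirely on the lexicon, so swapping lexicons can change both the extracted terms and the resulting score.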

library(magrittr)  # provides the %>% pipe

'My life has become terrible since I met you and lost money' %>% extract_sentiment_terms()

element_id sentence_id negative positive

1: 1 1 terrible,lost money

Sentiment Highlighting:

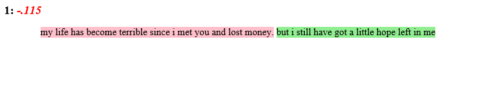

Finally, the highlight() function, coupled with sentiment_by(), produces HTML output in which parts of the sentences are highlighted in green and red to show their polarity. Trust me, this might seem trivial, but it really helps when presenting results, discussing false positives and identifying room for improvement in accuracy.
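If you need something similar outside sentimentr, for example in a custom report, the basic idea can be approximated by wrapping each sentence in a coloured HTML span according to the sign of its score. The toy_highlight() helper below is hypothetical and written for illustration; it is not how highlight() is implemented.

```r
# Hypothetical helper: colour each sentence by the sign of its score
toy_highlight <- function(sentences, scores) {
  colours <- ifelse(scores > 0, "green", ifelse(scores < 0, "red", "gray"))
  # note: sentences are inserted as-is, without HTML escaping
  spans <- sprintf('<span style="color:%s">%s</span>', colours, sentences)
  paste(spans, collapse = " ")
}

html <- toy_highlight(
  c("I am not very happy.", "He is very happy."),
  c(-0.06708204, 0.675)  # sentence scores from the sentiment() example
)
cat(html)
# writeLines(html, "highlight.html") would save it for viewing in a browser
```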

'My life has become terrible since I met you and lost money. But I still have got a little hope left in me' %>%
  sentiment_by(by = NULL) %>%
  highlight()

Try sentimentr in your next sentiment analysis or text analytics project, and do share your feedback in the comments. The complete code used here is available on my GitHub.