A while ago, I was trying to perform some analysis on a Medium publication for a personal project. However, data acquisition was a problem, because scraping only the publication’s home page does not guarantee that you get all the data you want.

That’s when I found out that each publication has its own archive. You just have to type “/archive” after the publication URL. You can even specify a year, month, and day to find all the stories published on that date, like this:

https://publicationURL/archive/year/month/day
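As a small illustration, the archive URL for any date can be built with Python’s standard datetime module. This is only a sketch: `archive_url` is a hypothetical helper name, and it assumes the /archive/year/month/day pattern above with a zero-padded month and day (the same padding the scraper below uses).

```python
from datetime import date


def archive_url(publication_url: str, day: date) -> str:
    """Build the archive URL for one day, e.g. .../archive/2019/01/05 (hypothetical helper)."""
    # The %m and %d format codes zero-pad month and day to two digits
    return f"{publication_url.rstrip('/')}/archive/{day:%Y/%m/%d}"


print(archive_url("https://medium.com/swlh", date(2019, 1, 5)))
# https://medium.com/swlh/archive/2019/01/05
```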

And suddenly the problem was solved: a very simple scraper would do the job. In this tutorial, we’ll see how to code a simple but powerful web scraper that can be used on any Medium publication. The concept behind this scraper can also be used to scrape data from lots of other websites, for whatever purpose you have in mind.

As we’re scraping a Medium publication, there’s no better example than The Startup. According to them, The Startup is the largest active Medium publication, with over 700k followers, so it should be a great source of data. In this article, you’ll see how to scrape all the articles they published in 2019 and how this data can be useful.

Web Scraping

Web scraping is the process of collecting data from websites using automated scripts. It consists of three main steps: fetching the page, parsing the HTML, and extracting the information you need.

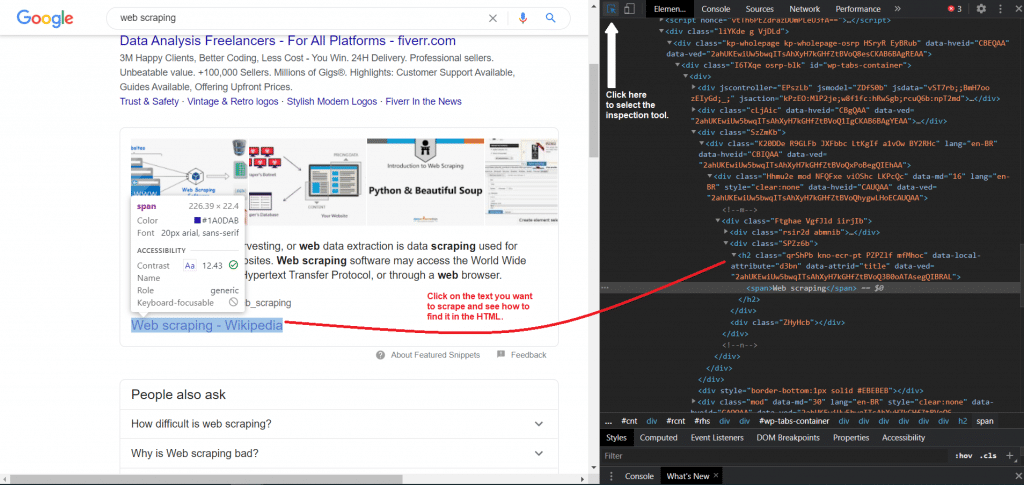
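The three steps can be sketched as three small functions using requests and BeautifulSoup. The function names and the choice of extracting every `h3` heading are illustrative assumptions, not part of the scraper built below. Extraction is kept separate from fetching so it can be tried on an HTML string without touching the network.

```python
import requests
from bs4 import BeautifulSoup


def fetch(url: str) -> str:
    """Step 1: download the raw HTML of a page."""
    response = requests.get(url, timeout=10)
    response.raise_for_status()  # fail loudly on 4xx/5xx responses
    return response.text


def parse(html: str) -> BeautifulSoup:
    """Step 2: turn the HTML string into a navigable tree."""
    return BeautifulSoup(html, "html.parser")


def extract_headings(soup: BeautifulSoup) -> list:
    """Step 3: pull out the information we care about (here, every h3 heading)."""
    return [h3.get_text(strip=True) for h3 in soup.find_all("h3")]


# Offline usage: skip fetch() and feed an HTML string straight into parse()
html = "<div><h3>First story</h3><h3> Second story </h3></div>"
print(extract_headings(parse(html)))  # ['First story', 'Second story']
```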

The third step is the one that can be a little tricky at first. It basically consists of finding the parts of the HTML that contain the information you want. You can find them by opening the page you want to scrape and pressing the F12 key on your keyboard to open the browser’s developer tools. Then you can select an element of the page to inspect. You can see this in the image below.

Then all you need to do is to use the tags and classes in the HTML to inform the scraper where to find the information. You need to do it for every part of the page you want to scrape. You can see it better in the code.
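One detail worth knowing when passing classes to BeautifulSoup: a `class_` string containing spaces is matched against the element’s whole class attribute, in that exact order, while a single class name matches any element carrying that class. A CSS selector through `select` requires all the listed classes but ignores their order. The HTML below is made up purely to show the difference.

```python
from bs4 import BeautifulSoup

# Made-up markup: the same two classes, in different orders
html = """
<div class="streamItem js-streamItem">A</div>
<div class="js-streamItem streamItem">B</div>
"""
soup = BeautifulSoup(html, "html.parser")

# A string with spaces must equal the whole class attribute, order included
exact = soup.find_all("div", class_="streamItem js-streamItem")
# A single class name matches any div that has it among its classes
single = soup.find_all("div", class_="streamItem")
# A CSS selector requires both classes but ignores their order
selector = soup.select("div.streamItem.js-streamItem")

print([d.text for d in exact])     # ['A']
print([d.text for d in single])    # ['A', 'B']
print([d.text for d in selector])  # ['A', 'B']
```

So if a site reorders its classes, a long `class_` string silently stops matching, while `select` keeps working.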

The Code

As this is a simple scraper, we’ll only use requests, BeautifulSoup, and Pandas. Requests will be used to get the pages we need, while BeautifulSoup parses the HTML. Pandas will be used to store the data in a DataFrame and then export it as a .csv file. So we’ll begin by importing these libraries and initializing an empty list to store the data. This list will be filled with other lists, one per story.

    import pandas as pd
    from bs4 import BeautifulSoup
    import requests

    stories_data = []

As mentioned earlier, the Medium archive stores the stories by their date of publication. As we intend to scrape every story published in The Startup in 2019, we need to iterate over every day of every month of that year. We’ll use nested `for` loops to iterate over the months of the year and then over the days of each month. To do this, we need to account for the different number of days in each month. It’s also important to make sure all days and months are represented by two-digit numbers.

    for month in range(1, 13):
        # Number of days in each month (2019 is not a leap year)
        if month in [1, 3, 5, 7, 8, 10, 12]:
            n_days = 31
        elif month in [4, 6, 9, 11]:
            n_days = 30
        else:
            n_days = 28

        for day in range(1, n_days + 1):
            # Zero-pad month and day so they always have two digits (e.g. '01')
            month, day = str(month), str(day)
            if len(month) == 1:
                month = f'0{month}'
            if len(day) == 1:
                day = f'0{day}'
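As an alternative sketch (not the author’s code), the standard datetime module can generate every date of a year, which removes the hand-written month lengths and would also handle leap years. The generator name `days_of_year` is hypothetical; it yields the same zero-padded month and day strings used in the rest of the loop.

```python
from datetime import date, timedelta


def days_of_year(year: int):
    """Yield (month, day) as zero-padded strings for every day of `year`."""
    current = date(year, 1, 1)
    while current.year == year:
        yield f"{current:%m}", f"{current:%d}"
        current += timedelta(days=1)


days_2019 = list(days_of_year(2019))
print(len(days_2019), days_2019[0], days_2019[-1])  # 365 ('01', '01') ('12', '31')
```

With this generator, `for month, day in days_of_year(2019):` could replace both nested loops and the padding code.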

And now the scraping begins. We use the month and day to set up the date that will be stored along with the scraped data and, of course, to create the URL for that specific day. Once this is done, we can use requests to get the page and parse the HTML with BeautifulSoup.

    # Still inside the day loop
    date = f'{month}/{day}/2019'
    url = f'https://medium.com/swlh/archive/2019/{month}/{day}'
    page = requests.get(url)
    soup = BeautifulSoup(page.text, 'html.parser')

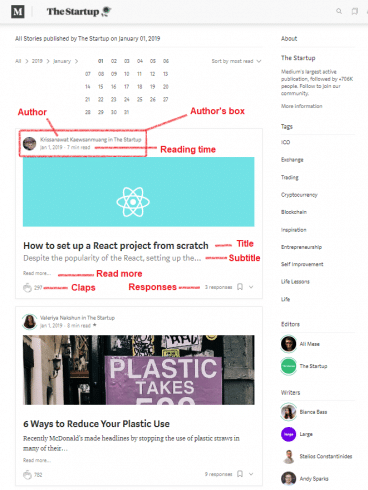

So this is The Startup’s archive page for January 1st, 2019. We can see that each story is stored in a container. What we need to do is to grab all these containers. For this, we’ll use the find_all method.

stories = soup.find_all('div', class_='streamItem streamItem--postPreview js-streamItem')

The above code generates a list containing all the story containers on the page.

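All we need to do now is to iterate over it and grab the information we want from each story. We’ll scrape:

- the title and subtitle;
- the number of claps and responses;
- the author’s URL and the story’s URL;
- the reading time;
- the number of sections and their titles;
- the number of paragraphs and their text.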

We’ll first select a box inside the container that I call the author’s box. From this box, we’ll extract the author’s URL and the reading time. And here is the only condition in this scraper: if the container does not show a reading time, we won’t scrape this story and will move on to the next one. This is because such stories contain only images and one or two lines of text. We’re not interested in them, as we can think of them as outliers. We’ll use a try and except block to handle that.

Other than that, we must be prepared for a story not having a title or a subtitle (yes, that happens) and, of course, not having claps or responses. The if clauses will prevent an error from being raised in such situations.

All this scraped information will later be appended to the each_story list, which is initialized in the loop. Here is the code:

    # Inside the day loop: one iteration per story container
    for story in stories:
        each_story = []

        # The author's box holds the author's link and the reading time
        author_box = story.find('div', class_='postMetaInline u-floatLeft u-sm-maxWidthFullWidth')
        author_url = author_box.find('a')['href']

        # Skip stories with no reading time (mostly image-only posts)
        try:
            reading_time = author_box.find('span', class_='readingTime')['title']
        except (TypeError, KeyError):
            # TypeError: no readingTime span (find returned None); KeyError: no title attribute
            continue

        title = story.find('h3').text if story.find('h3') else '-'
        subtitle = story.find('h4').text if story.find('h4') else '-'

        # Stories without claps or responses simply lack these elements
        claps_button = story.find('button', class_='button button--chromeless u-baseColor--buttonNormal'
                                                   ' js-multirecommendCountButton u-disablePointerEvents')
        claps = claps_button.text if claps_button else 0

        responses_link = story.find('a', class_='button button--chromeless u-baseColor--buttonNormal')
        responses = responses_link.text if responses_link else '0 responses'

        story_url = story.find('a', class_='button button--smaller button--chromeless u-baseColor--buttonNormal')['href']
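Because several of these fields can be missing, a small helper keeps the pattern readable. The sketch below is a hypothetical refactor, not the author’s code: `text_or` is an illustrative name, and the HTML is made up, with simplified class names that only mimic the structure described above. It uses an explicit None check instead of try/except to skip a container with no reading time.

```python
from bs4 import BeautifulSoup


def text_or(tag, default):
    """Return the tag's stripped text, or `default` when the tag is missing (hypothetical helper)."""
    return tag.get_text(strip=True) if tag is not None else default


# Made-up markup loosely shaped like the archive containers
html = """
<div class="story"><h3>Title A</h3><span class="readingTime" title="5 min read"></span>
  <button class="claps">120</button></div>
<div class="story"><h3>Image-only post</h3></div>
<div class="story"><h4>No title here</h4><span class="readingTime" title="3 min read"></span></div>
"""
soup = BeautifulSoup(html, "html.parser")

rows = []
for story in soup.find_all("div", class_="story"):
    reading = story.find("span", class_="readingTime")
    if reading is None or not reading.has_attr("title"):
        continue  # no reading time: treat as an outlier and skip it
    rows.append([
        text_or(story.find("h3"), "-"),
        text_or(story.find("h4"), "-"),
        text_or(story.find("button", class_="claps"), 0),
        reading["title"],
    ])

print(rows)
# [['Title A', '-', '120', '5 min read'], ['-', 'No title here', 0, '3 min read']]
```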

Cleaning some data

Before we move on to scraping the text of the story, let’s first do a little cleaning of the reading_time and responses data. Instead of storing these variables as “5 min read” and “5 responses”, we’ll keep only the numbers. These two lines of code take care of that:

    reading_time = reading_time.split()[0]
    responses = responses.split()[0]
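The two lines above keep the numbers as strings such as '5'. If you would rather store integers, with a default for text that has no leading number, a helper along these lines could be used. `leading_int` is a hypothetical name, and it assumes the values start with a plain integer, as in “5 min read” and “5 responses”.

```python
def leading_int(text, default=0):
    """Return the integer at the start of strings like '5 min read' or '12 responses'."""
    parts = str(text).split()
    if parts and parts[0].isdigit():
        return int(parts[0])
    return default  # empty text or no leading integer


print(leading_int("5 min read"), leading_int("12 responses"), leading_int(""))  # 5 12 0
```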

Back to scraping…

We’ll now scrape the article page. We’ll use requests once more to get the story_url page and BeautifulSoup to parse the HTML. From the article page, we need to find all the section tags, which contain the text of the article. We’ll also initialize two new lists, one to store the article’s paragraphs and the other to store the title of each section in the article.

    story_page = requests.get(story_url)
    story_soup = BeautifulSoup(story_page.text, 'html.parser')
    sections = story_soup.find_all('section')

    story_paragraphs = []
    section_titles = []

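And now we only need to loop through the sections and, for each section:

- find all the paragraphs and store their text;
- find all the section titles (the h1 tags) and store them.

After the loop, we count the section titles and the paragraphs.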

    for section in sections:
        # Store the text of every paragraph in this section
        paragraphs = section.find_all('p')
        for paragraph in paragraphs:
            story_paragraphs.append(paragraph.text)

        # Store every section title (h1) in this section
        subs = section.find_all('h1')
        for sub in subs:
            section_titles.append(sub.text)

    number_sections = len(section_titles)
    number_paragraphs = len(story_paragraphs)

Requesting every story page significantly increases the time needed to scrape everything, but it also makes the final dataset much more valuable.
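To see what the section loop produces, here it is wrapped in a hypothetical function, `summarize_article`, and run on a tiny made-up article. Note that number_sections is computed from the h1 titles that were found, so a section without a title is not counted.

```python
from bs4 import BeautifulSoup


def summarize_article(html):
    """Collect paragraph texts and h1 section titles from every <section> (hypothetical helper)."""
    soup = BeautifulSoup(html, "html.parser")
    story_paragraphs, section_titles = [], []
    for section in soup.find_all("section"):
        story_paragraphs.extend(p.text for p in section.find_all("p"))
        section_titles.extend(h1.text for h1 in section.find_all("h1"))
    return {
        "number_sections": len(section_titles),
        "section_titles": section_titles,
        "number_paragraphs": len(story_paragraphs),
        "paragraphs": story_paragraphs,
    }


# Made-up article: two <section> tags, only the second one has an <h1> title
article = """
<section><p>Intro paragraph.</p></section>
<section><h1>Results</h1><p>First point.</p><p>Second point.</p></section>
"""
print(summarize_article(article))
```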

Storing and exporting the data

The scraping is now finished. Everything will now be appended to the each_story list, which will be appended to the stories_data list.

    each_story.extend([date, title, subtitle, claps, responses,
                       author_url, story_url, reading_time,
                       number_sections, section_titles,
                       number_paragraphs, story_paragraphs])
    stories_data.append(each_story)

As stories_data is now a list of lists, we can easily transform it into a DataFrame and then export the DataFrame to a .csv file. For this last step, as the text data is full of commas, it’s recommended to use a tab (‘\t’) as the separator.

    columns = ['date', 'title', 'subtitle', 'claps', 'responses',
               'author_url', 'story_url', 'reading_time (mins)',
               'number_sections', 'section_titles', 'number_paragraphs', 'paragraphs']

    df = pd.DataFrame(stories_data, columns=columns)
    df.to_csv('1.csv', sep='\t', index=False)
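One thing to keep in mind: section_titles and paragraphs hold Python lists, and to_csv writes them out as their string representation. When reading the file back, `ast.literal_eval` can turn those strings into lists again. The sketch below uses an in-memory buffer and made-up rows instead of the real 1.csv file.

```python
import ast
import io

import pandas as pd

# Made-up rows with the same kind of list-valued column as the scraped data
df = pd.DataFrame({
    "title": ["Story A", "Story B"],
    "paragraphs": [["First, with a comma.", "Second."], ["Only one."]],
})

buffer = io.StringIO()
df.to_csv(buffer, sep="\t", index=False)  # lists are written as their str() form
buffer.seek(0)

loaded = pd.read_csv(buffer, sep="\t")
print(type(loaded.loc[0, "paragraphs"]))  # <class 'str'>

# Convert the string representation back into real Python lists
loaded["paragraphs"] = loaded["paragraphs"].apply(ast.literal_eval)
print(loaded.loc[0, "paragraphs"])  # ['First, with a comma.', 'Second.']
```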

The Data

This is what the data looks like:

As you can see, we have scraped data from 21,616 Medium articles. That’s a lot! It means our scraper accessed almost 22 thousand Medium pages. In fact, counting one archive page for each day of the year, we accessed 21,616 + 365 = 21,981 pages!

This huge number of requests can be a problem, though. The website we’re scraping can detect that the requests are not coming from a human and easily block our IP address. There are some workarounds for this. One solution is to insert small pauses in your code to make the interactions with the server more human-like. We can use the randint function from NumPy and the sleep function from the time module to achieve this:

    # Import these
    import numpy as np
    from time import sleep

    # Put several copies of this line in different places around the code
    sleep(np.random.randint(1, 15))

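This code randomly chooses a whole number of seconds from 1 to 14 for the scraper to pause (NumPy’s randint excludes the upper bound, so use randint(1, 16) if you want pauses of up to 15 seconds).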
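If you would rather not depend on NumPy just for this, the standard library’s random module can do the same job. This is a sketch: `polite_pause` is a hypothetical name, and the sleep function is passed in as a parameter only so the behaviour can be checked without actually waiting.

```python
import random
import time


def polite_pause(min_seconds=1.0, max_seconds=15.0, sleep=time.sleep):
    """Sleep for a random delay between min_seconds and max_seconds; return the delay used."""
    delay = random.uniform(min_seconds, max_seconds)  # float between the two bounds
    sleep(delay)
    return delay


# Usage inside the scraper, e.g. right before each requests.get(...) call:
# polite_pause()
```

Unlike randint, uniform returns fractional seconds, which makes the timing slightly less regular.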

If you’re scraping a lot of data, however, even these pauses may not be enough. In this case, you can either build your own infrastructure of IP addresses or, if you want to keep it simple, get in touch with a proxy provider, such as Infatica or others. They will deal with this problem for you by constantly changing your IP address so you don’t get blocked.
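For the proxy route, requests accepts a `proxies` dictionary on each call. The minimal sketch below rotates through a list of proxies; the addresses and function names are placeholders, not real endpoints or any provider’s API, and the way your provider expects to be used may differ, so check its documentation.

```python
from itertools import cycle

import requests

# Placeholder addresses: replace with the endpoints your provider gives you
PROXY_URLS = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
]


def proxy_rotation(proxy_urls):
    """Endlessly yield requests-style proxy dicts, one proxy after another."""
    for url in cycle(proxy_urls):
        yield {"http": url, "https": url}


proxies = proxy_rotation(PROXY_URLS)


def get_via_next_proxy(url):
    """Fetch `url` through the next proxy in the rotation."""
    return requests.get(url, proxies=next(proxies), timeout=10)
```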

But what to do with this data?

That’s something you might be asking yourself. Well, there’s always a lot to learn from data. We can perform some analysis to answer simple questions such as:

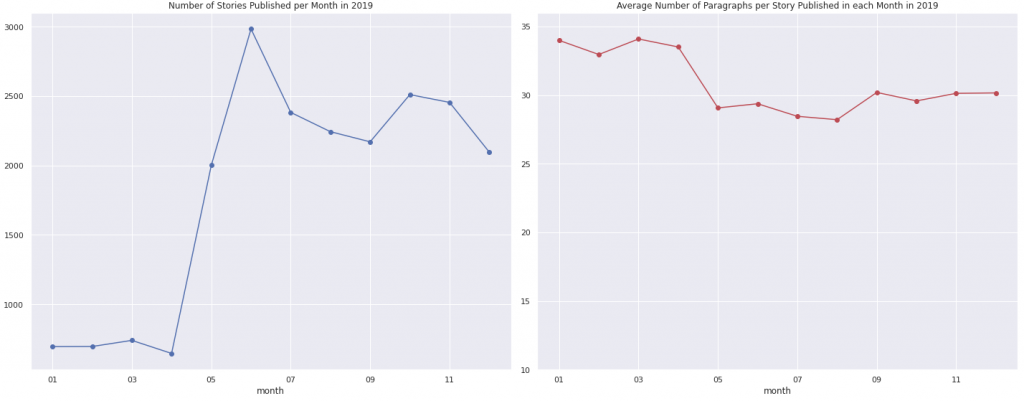

The charts below can help with those. Notice how the number of stories published per month skyrocketed in the second half of 2019. Also, stories became around five paragraphs shorter, on average, over the course of the year. I’m counting paragraphs here, but one could also look at the average number of words or even characters per story.
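As a sketch of the word-count idea, here is how the average number of words per story per month could be computed with Pandas. It assumes a DataFrame with the same date (month/day/2019) and paragraphs (list of strings) columns as the scraped data; the tiny DataFrame here is made up so the snippet runs on its own. If you load the data back from the .csv file, the lists come back as strings and need converting first.

```python
import pandas as pd

# Made-up rows with the same 'date' and 'paragraphs' columns as the scraped data
df = pd.DataFrame({
    "date": ["01/05/2019", "01/20/2019", "02/03/2019"],
    "paragraphs": [["One two three.", "Four five."], ["Six seven."], ["Eight nine ten eleven."]],
})

# Words per story: whitespace-separated tokens summed over all paragraphs
df["n_words"] = df["paragraphs"].apply(lambda ps: sum(len(p.split()) for p in ps))

# Month number from the 'MM/DD/YYYY' date string, then the average per month
df["month"] = pd.to_datetime(df["date"], format="%m/%d/%Y").dt.month
avg_words = df.groupby("month")["n_words"].mean()
print(avg_words)
```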

And, of course, there’s Natural Language Processing (NLP). We have a lot of text data that we can use for NLP. It’s possible to analyze the kind of stories that are usually published in The Startup, to investigate what makes a story receive more or fewer claps, or even to predict the number of claps and responses a new story may receive.

There is a lot to do with this data, but don’t miss the point here: this article is about the scraper, not the scraped data. The main goal is to show how powerful a tool it can be.

This same web scraping concept can also be used for many other tasks. For example, you can scrape Amazon and keep track of prices, or, if you are looking for a job, you can build a dataset of job opportunities by scraping a job search website. The possibilities are endless, so it’s up to you!

If you liked this and think it may be useful to you, you can find the complete code here. If you have any questions or suggestions, or just want to get in touch, feel free to contact me through Twitter or LinkedIn.